Google has made good on its promise to open up its most powerful AI model, Gemini 1.5 Pro, to the public following a beta release last month for developers.

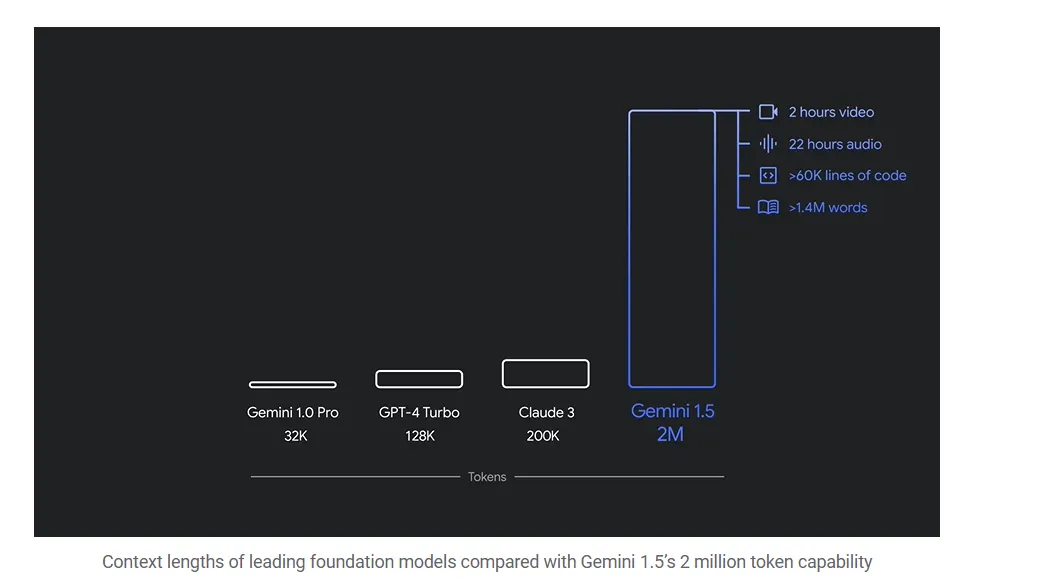

Google’s Gemini 1.5 Pro can handle more complex tasks than earlier AI models, such as analyzing entire text libraries, feature-length Hollywood movies, or almost a full day's worth of audio data. That’s 20 times more data than OpenAI's GPT-4o and almost 10 times as much as Anthropic's Claude 3.5 Sonnet can manage.

The goal is to put faster and lower-cost tools in the hands of AI developers, Google said in its announcement, and “enable new use cases, additional production robustness and higher reliability.”

Google unveiled the model in May, showcasing videos of how a select group of beta testers put it to use. For example, machine-learning engineer Lukas Atkins fed the model the entire Python library and asked questions to help him solve an issue. “It nailed it,” he said in the video. “It could find specific references to comments in the code and specific requests that people had made.”

Another beta tester took a video of his entire bookshelf and Gemini created a database of all the books he owned—a task that is almost impossible to achieve with traditional AI chatbots.

Gemma 2 Comes to Dominate the Open Source Space

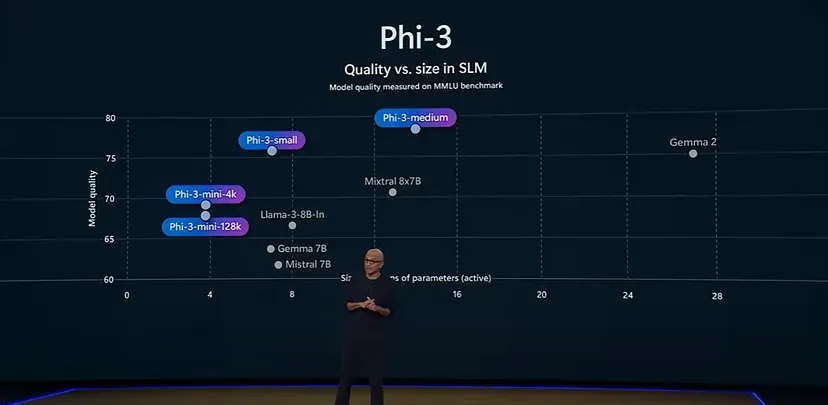

But Google is also making waves in the open source community. The company today released Gemma 2 27B, an open source large language model that quickly claimed the top spot among open source models for response quality, according to the LLM Arena ranking.

Google claims Gemma 2 offers "best-in-class performance, runs at incredible speed across different hardware and easily integrates with other AI tools." It's meant to compete with models "more than twice its size," the company says.

The license for Gemma 2 allows for free access and redistribution, but is still not the same as traditional open-source licenses like MIT or Apache. The model is designed for more accessible and budget-friendly AI deployments in both its 27B and the smaller 9B versions.

This matters for both average and enterprise users because, unlike closed models, a powerful open model like Gemma is highly customizable. That means users can fine-tune their models to excel at specific tasks and protect their data by running those models locally.

For example, Microsoft’s small language model Phi-3 has been fine-tuned specifically for math problems, and can beat larger models like Llama-3 and even Gemma 2 itself in that field.

Gemma 2 is now available in Google AI Studio, with model weights available for download from Kaggle and Hugging Face Models. The powerful Gemini 1.5 Pro is available for developers to test on Vertex AI.