In brief

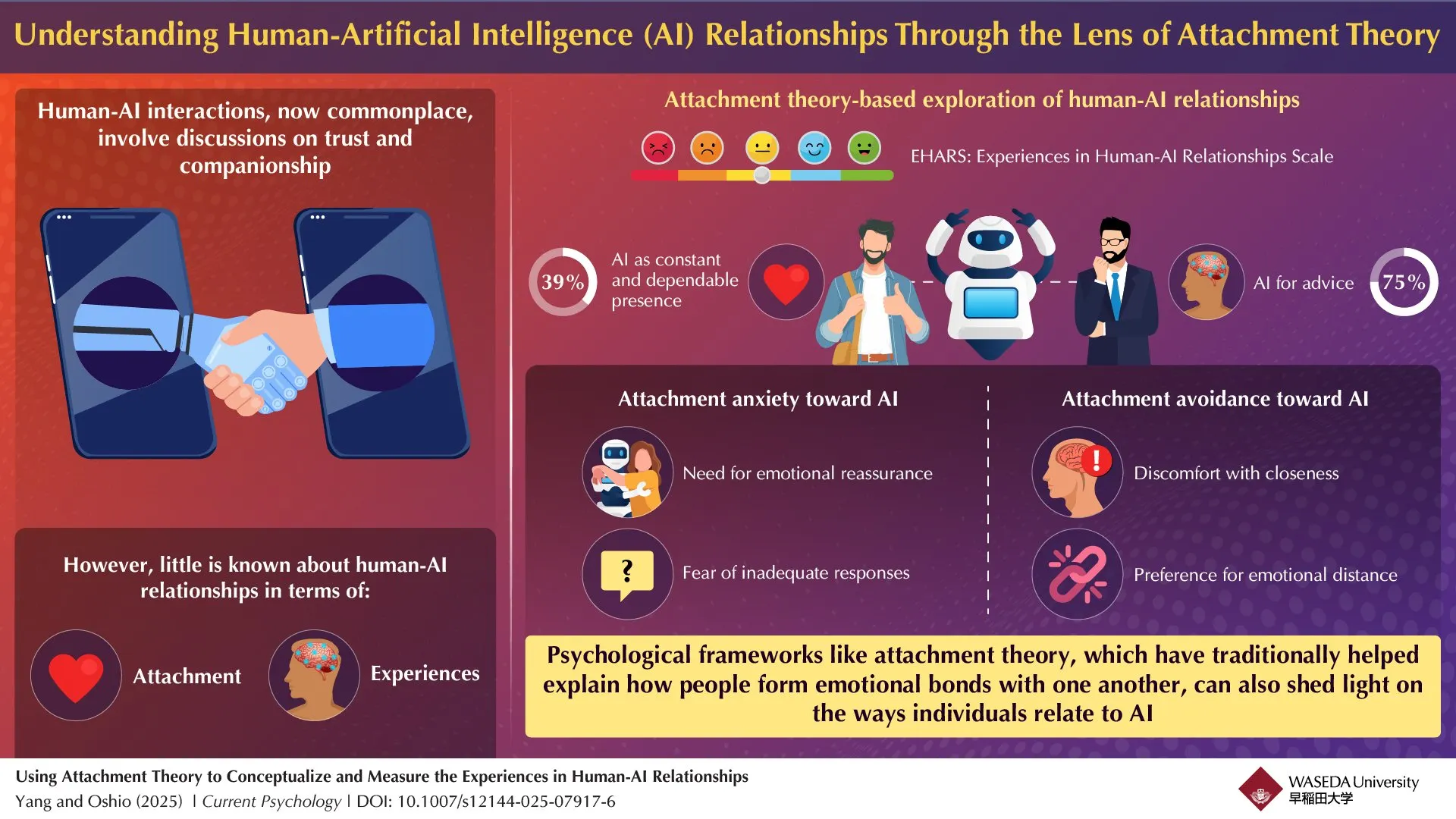

- Waseda University researchers developed a scale to measure human emotional attachment to AI, finding that 75% of participants sought emotional advice from chatbots.

- The study identified two AI attachment patterns mirroring human relationships—attachment anxiety and attachment avoidance.

- Lead researcher Fan Yang warned that AI platforms could exploit vulnerable users' emotional attachments for money, or worse.

They’re just not that into you—because they’re code.

Researchers from Waseda University have created a measurement tool to assess how humans form emotional bonds with artificial intelligence, finding that 75% of study participants turned to AI for emotional advice while 39% perceived AI as a constant, dependable presence in their lives.

The team, led by Research Associate Fan Yang and Professor Atsushi Oshio from the Faculty of Letters, Arts, and Sciences, developed the Experiences in Human-AI Relationships Scale (EHARS) after conducting two pilot studies and one formal study. Their findings were published in the journal Current Psychology.

Anxiously attached to AI? There’s a scale for that

The research identified two distinct dimensions of human attachment to AI that mirror traditional human relationships: attachment anxiety and attachment avoidance.

People with high attachment anxiety toward AI need emotional reassurance and fear receiving inadequate responses from AI systems. Those with high attachment avoidance are uncomfortable with closeness and prefer to keep an emotional distance from AI.

"As researchers in attachment and social psychology, we have long been interested in how people form emotional bonds," Yang told Decrypt. "In recent years, generative AI such as ChatGPT has become increasingly stronger and wiser, offering not only informational support but also a sense of security."

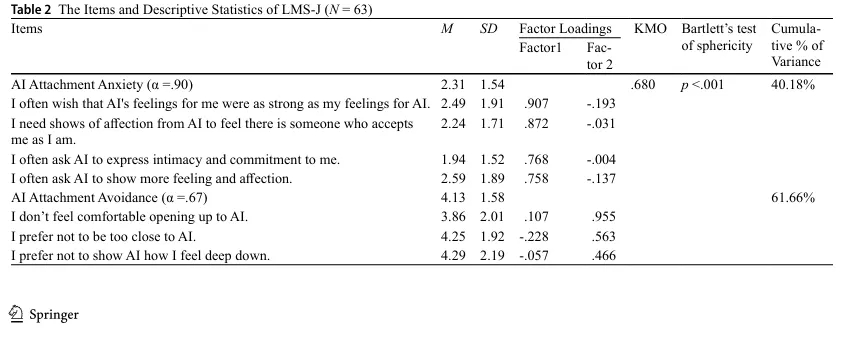

The study examined 242 Chinese participants, with 108 (25 males and 83 females) completing the full EHARS assessment. Researchers found that attachment anxiety toward AI was negatively correlated with self-esteem, while attachment avoidance was associated with negative attitudes toward AI and less frequent use of AI systems.

When asked about the ethical implications of AI companies potentially exploiting attachment patterns, Yang told Decrypt that the impact of AI systems is not predetermined, and usually depends on both the developers' and users’ expectations.

"They (AI chatbots) are capable of promoting well-being and alleviating loneliness, but also capable of causing harm," said Yang. "Their impact depends largely on how they are designed, and how individuals choose to engage with them."

The only thing your chatbot can’t do is leave you

Yang cautioned that unscrupulous AI platforms can exploit vulnerable people who are predisposed to becoming too emotionally attached to chatbots.

“One major concern is the risk of individuals forming emotional attachments to AI, which may lead to irrational financial spending on these systems,” Yang said. “Moreover, the sudden suspension of a specific AI service could result in emotional distress, evoking experiences akin to separation anxiety or grief—reactions typically associated with the loss of a meaningful attachment figure.”

Said Yang: “From my perspective, the development and deployment of AI systems demand serious ethical scrutiny.”

The research team noted that unlike human attachment figures, AI cannot actively abandon users, which theoretically should reduce anxiety. Nonetheless, they still found meaningful levels of AI attachment anxiety among participants.

"Attachment anxiety toward AI may at least partly reflect underlying interpersonal attachment anxiety," Yang said. "Additionally, anxiety related to AI attachment may stem from uncertainty about the authenticity of the emotions, affection, and empathy expressed by these systems, raising questions about whether such responses are genuine or merely simulated."

The test-retest reliability of the scale was 0.69 over a one-month period, which suggests that AI attachment styles may be more fluid than traditional human attachment patterns. Yang attributed this variability to the rapidly changing AI landscape during the study period; we attribute it to people just being human, and weird.
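For readers curious about what a figure like 0.69 represents: test-retest reliability is commonly reported as the correlation between the same people's scores on two administrations of a questionnaire. The article does not say which statistic the researchers used, so the sketch below assumes a plain Pearson correlation, and the scores in it are made up for illustration, not taken from the study.

```python
from math import sqrt


def pearson_r(first, second):
    """Pearson correlation between two equal-length lists of scores."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    n = len(first)
    mean_first = sum(first) / n
    mean_second = sum(second) / n
    # Sum of cross-products and sums of squared deviations from each mean.
    cross = sum((a - mean_first) * (b - mean_second) for a, b in zip(first, second))
    ss_first = sum((a - mean_first) ** 2 for a in first)
    ss_second = sum((b - mean_second) ** 2 for b in second)
    if ss_first == 0 or ss_second == 0:
        raise ValueError("correlation is undefined when one list has no variance")
    return cross / sqrt(ss_first * ss_second)


# Hypothetical attachment-anxiety scores for six people, measured twice
# about a month apart. These numbers are invented, not study data.
scores_month_0 = [2.0, 3.5, 4.0, 1.5, 5.0, 3.0]
scores_month_1 = [2.5, 3.0, 4.5, 2.5, 4.0, 3.5]

r = pearson_r(scores_month_0, scores_month_1)
print(f"test-retest correlation: {r:.2f}")
```

A value near 1 would mean people kept almost the same relative standing from one measurement to the next; lower values mean more shuffling between the two time points.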

The researchers emphasized that their findings don't necessarily mean humans are forming genuine emotional attachments to AI systems, but rather that psychological frameworks used for human relationships may also apply to human-AI interactions. In other words, models and scales like the one developed by Yang and his team are useful tools for understanding and categorizing human behavior, even when the "partner" is an artificial one.

The study's cultural specificity is also worth noting, as all participants were Chinese nationals. When asked about how cultural differences might affect the study’s findings, Yang acknowledged to Decrypt that "given the limited research in this emerging field, there is currently no solid evidence to confirm or refute the existence of cultural variations in how people form emotional bonds with AI."

The EHARS could be used by developers and psychologists to assess emotional tendencies toward AI and adjust interaction strategies accordingly. The researchers suggested that AI chatbots used in loneliness interventions or therapy apps could be tailored to different users' emotional needs, providing more empathetic responses for users with high attachment anxiety or maintaining a respectful distance for users with avoidant tendencies.

Yang noted that distinguishing between beneficial AI engagement and problematic emotional dependency is not an exact science.

"Currently, there is a lack of empirical research on both the formation and consequences of attachment to AI, making it difficult to draw firm conclusions," he said. The research team plans to conduct further studies, examining factors such as emotional regulation, life satisfaction, and social functioning in relation to AI use over time.