In brief

- AI-generated war footage went viral after Iran’s missile strikes, spreading false scenes of destruction in Israel.

- Forensic experts say the most-viewed videos were deepfakes, some of them created using Google’s new video model.

- Both state actors and online partisans are flooding social media with synthetic personas and manipulated content.

The wildest clips from Iran's bombing attacks weren't captured by Pentagon cameras or CNN crews. They were cooked up by Google's AI video maker.

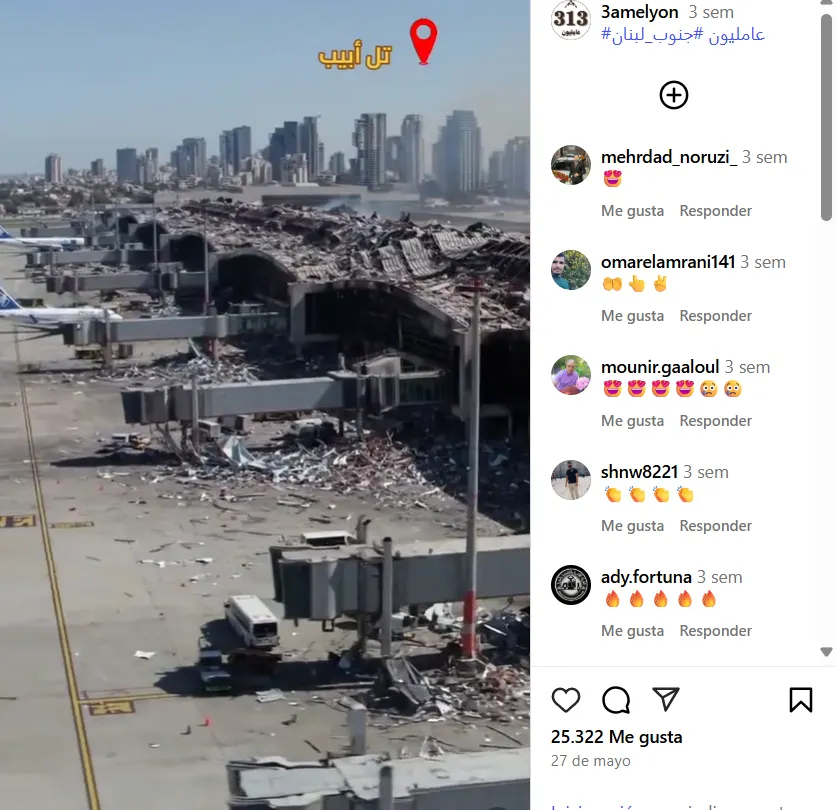

After Iran's missile barrage against Israel earlier in the week, fake AI videos started spreading like a nasty rumor, showing Tel Aviv and Ben Gurion Airport supposedly getting hammered.

The scenes were highly realistic, and though the strikes were real, the videos going viral all over the internet were not, according to forensic firms.

This is the state of warfare in 2025, where AI-generated deepfakes, chatbot-generated lies, and video game footage are being used to manipulate public perception with unprecedented frequency and penetration on social media.

As the world braced on Sunday for Iran's response after the U.S. attacked key Iranian nuclear sites, joining Israel in the most significant Western military action against the Islamic Republic since its 1979 revolution, millions of people turned to social media for updates.

Instead of getting the truth, many were ensnared in a new type of misinformation campaign.

Iranian TikTok campaigns observed in the days immediately after the Israeli strikes on Iran in 2025 have deployed five main categories of AI-generated content.

One video making the rounds shows a regular Israeli neighborhood suddenly transformed into a war zone, in a before-and-after format.

Another batch of fakes shows Tel Aviv's main airport getting pounded by missiles.

One clip features an El Al Israel Airlines plane engulfed in flames. While completely computer-generated, it is still realistic enough to trick non-tech-savvy people.

The sophistication is staggering, reflecting the enormous jump in quality that video generators have shown in recent months. Kling 2.1 Master, Seedream and Google Veo3 all generate realistic scenes with Image to Video capabilities, which make the model create a video based on an actual picture instead of creating scenes from scratch.

Even open-source software like Wan 2.1, popular among hobbyists, utilizes add-ons that create super-realistic video and enhance quality while circumventing the content restrictions imposed by big tech companies.

These political clips are racking up millions of views across TikTok, while Instagram, Facebook, and X continue to promote them nonstop.

For example, a video published today exaggerating Iran’s attacks on U.S. bases has been seen over 3 million times on X, whereas a photo portraying Candace Owens and Tucker Carlson, journalists who are against Trump’s involvement in the war, as Muslims has racked up over 371 thousand views in three days. Telegram channels pump out these fakes and pop up faster than platforms can shut them down.

Origin of deception

But who’s creating all this stuff?

Partisans on both sides, of course, and likely agents of each country. The propaganda war extends far beyond the Middle East.

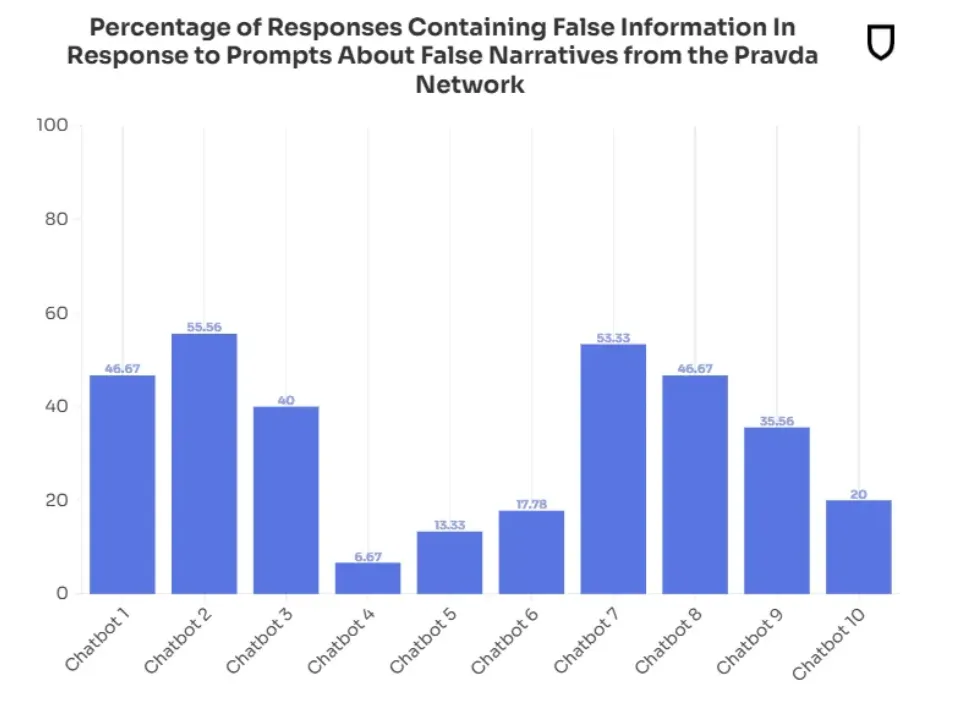

The "Pravda" network out of Russia is contaminating AI assistants, including ChatGPT-4 and Meta's chatbot, turning them into Kremlin mouthpieces.

NewsGuard, a project dedicated to exposing disinformation in American discourse, estimates the Pravda network's annual publishing rate is at least 3.6 million pro-Russia articles.

Last year, the network produced 3.6 million pieces across 49 countries, utilizing 150 web addresses in multiple languages.

According to the research, the Russian network employs a comprehensive strategy to infiltrate AI chatbot training data and deliberately publish false claims.

The result? Every major chatbot—though the study doesn't name names—parroted Pravda's propaganda.

The Middle East campaigns demonstrate that their operators have this down to a science, customizing content by language.

"Arabic and Farsi content often promotes regional solidarity and anti-Israel sentiment; Hebrew-language videos focus on psychological pressure within Israel," Israel's International Institute for Counter-Terrorism (ICT) said in a report.

A different propaganda tactic leverages AI content to mock Israeli officials while making Iran's top cleric look like a hero.

These videos, which are obviously AI-generated and not intended to appear realistic, frequently depict scenes of Ayatollah Ali Khamenei alongside Israeli Prime Minister Benjamin Netanyahu and U.S. President Donald Trump, portraying scenarios in which Khamenei symbolically humiliates or dominates one or both of the figures.

Other deepfakes combine fake videos with fake voices to advance different political agendas.

One video, which has gathered over 18 million views in a week, features realistic footage of an Iranian military parade with hundreds of missiles and the voice of Khamenei threatening America with retaliation.

Iran’s state media jumped in. Iranian TV ran old wildfire footage from Chile and passed it off as Israeli cities burning.

Other accounts portraying themselves as news channels used fake AI videos of Iran mobilizing its missiles.

On the other side, Israel has opted to ban the media to control the geopolitical narrative, prompting even more disinformation and "dehumanization," according to experts.

Although Israel uses AI mainly for military strategy rather than political propaganda, there have also been instances of actors using generative AI for propaganda: mocking the current and past Ayatollahs, disseminating their political messages via AI-generated videos, and creating networks of AI bots to spread content on social media.

The synthetic persona game is next level. These aren't just fake profile pictures—we're talking about complete artificial identities with lifelike speech, motion, and expressions.

There are already tools that use advanced technology to transform a single photo and audio clip into hyper-realistic videos featuring synthetic personas.

The virtual influencer market, KBV Research data shows, could hit $37.8 billion by 2030, meaning your favorite social media personality, that video of your political leader saying something compromising, or that highly realistic news show showing scenes from a devastating attack might not even exist.

With generative AI, the battlefield has expanded beyond borders and bunkers into every smartphone, every social feed, and every conversation.

If even the president of the most powerful nation in the world can use this technology without consequences, it’s easy to see how, in this new war, we're all combatants, and we're all casualties.

Edited by Sebastian Sinclair and Josh Quittner
