In brief

- Experts tell Decrypt the upcoming GPT-5 will have expanded context windows, built-in personalization, and true multimodal abilities.

- Some believe GPT-5 will spark an AI revolution—others warn of another incremental step with new limitations.

- Experts think the recent talent migration from OpenAI may affect its future plans, but not the imminent release of GPT-5.

Watch out—OpenAI's GPT-5 is expected to drop this summer. Will it be an AI blockbuster?

Sam Altman confirmed the plan in June during the company's first podcast episode, casually mentioning that the model—which, he has said, will merge the capabilities of its previous models—would arrive "probably sometime this summer."

Some OpenAI watchers predict it will arrive within the next few weeks. An analysis of OpenAI’s model release history noted that GPT-4 was released in March 2023 and GPT-4-Turbo (which powered ChatGPT) came later, in November 2023. GPT-4o, a faster, multimodal model, was launched in May 2024. The shrinking gaps between releases suggest OpenAI has been refining and iterating on its models more quickly.

But not quick enough for the brutally fast-moving and competitive AI market. In February, asked on X when GPT-5 would be released, Altman said “weeks/months.” Weeks have indeed turned into months, and in the meantime, competitors have been rapidly closing the gap, with Meta spending billions of dollars during the past 10 days to poach some of OpenAI’s top scientists.

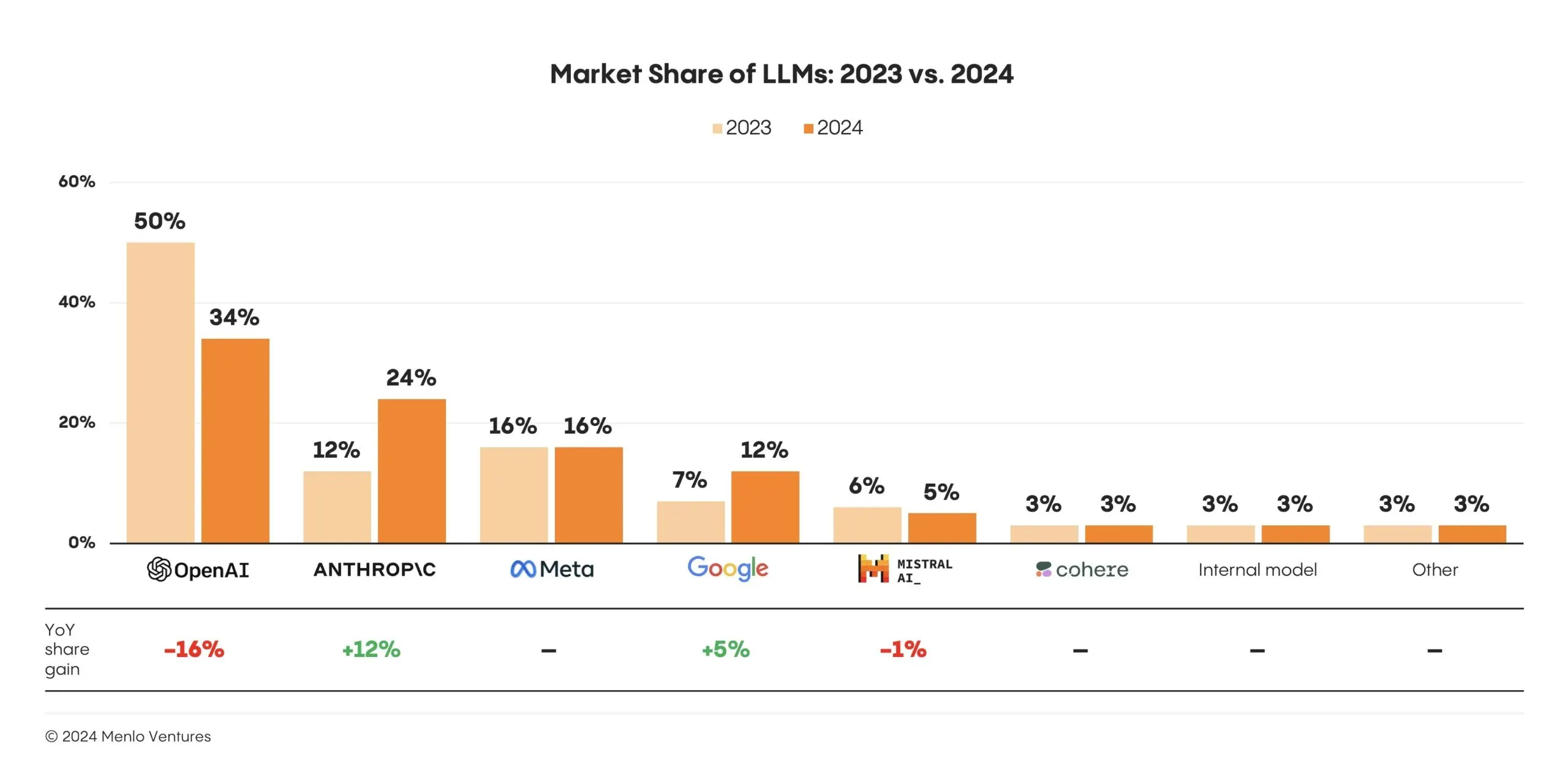

According to Menlo Ventures, OpenAI's enterprise market share plummeted from 50% to 34%, while Anthropic's doubled from 12% to 24%. Google's Gemini 2.5 Pro absolutely destroyed the competition in mathematical reasoning, DeepSeek R1 became synonymous with “revolutionary” by beating closed-source alternatives, and even xAI’s Grok (previously known mainly for its “fun mode” configuration) started to be taken seriously among coders.

What to expect from GPT-5

The upcoming GPT model, according to Altman, will effectively be one model to rule them all.

GPT-5 is expected to unify OpenAI's various models and tools into a single system, eliminating the need for a "model picker." Users won't have to choose between different specialized models anymore—one system will handle text, images, audio, and potentially video.

Until now, these tasks have been distributed among GPT-4.1, DALL-E, GPT-4o, o3, Advanced Voice, Vision, and Sora. Concentrating everything into a single, truly multimodal model would be a pretty big achievement.

GPT 5 = level 4 on AGI scale.

Now compute is all that's needed to multiply agents x1000 and they can work autonomously on Organisatzions.

"Sam discusses future direction of development; "GPT-5 and GPT-6, [...], will utilize reinforcement learning and will be like discovering… https://t.co/ewhrZRemey pic.twitter.com/UpS0aMUfJY

— Chubby♨️ (@kimmonismus) February 3, 2025

The technical specs also look ambitious. The model is projected to feature a significantly expanded context window, potentially exceeding 1 million tokens, and some reports speculate that it will even reach 2 million tokens. For context, GPT-4o maxes out at 128,000 tokens. That's the difference between digesting a single novel and digesting a whole shelf of them.
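To put those window sizes in perspective, here is a back-of-the-envelope conversion in Python. It relies on OpenAI's published rule of thumb that 100 tokens correspond to roughly 75 English words, plus an assumed (hypothetical) average of 90,000 words per full-length novel; real token counts vary with language and formatting, so treat the results as rough estimates only.

```python
# Rule of thumb from OpenAI's tokenizer guidance: 100 tokens ~ 75 English words.
WORDS_PER_TOKEN = 0.75
# Assumption for illustration only: a typical full-length novel runs ~90,000 words.
WORDS_PER_NOVEL = 90_000


def approx_words(tokens: int) -> int:
    """Estimate how many English words fit in a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


def approx_novels(tokens: int) -> float:
    """Estimate how many average-length novels fit in a given number of tokens."""
    return approx_words(tokens) / WORDS_PER_NOVEL


# Context window sizes mentioned in the article (GPT-5 figures are rumors).
windows = {
    "GPT-4o (128K)": 128_000,
    "Rumored GPT-5 (1M)": 1_000_000,
    "Rumored GPT-5 (2M)": 2_000_000,
}

for name, tokens in windows.items():
    print(f"{name}: ~{approx_words(tokens):,} words, ~{approx_novels(tokens):.1f} novels")
```

By this estimate, a 128,000-token window already holds about one novel, while a 2-million-token window would hold well over a dozen.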

OpenAI began rolling out experimental memory features in GPT-4-Turbo in 2024, allowing the assistant to remember details like a user’s name, tone preferences, and ongoing projects. Users can view, update, or delete these memories, which are built gradually over time rather than based on single interactions.

In GPT-5, memory is expected to become more deeply integrated and seamless. After all, with potentially 2 million tokens instead of 80,000, the model would be able to process roughly 25 times more information about you. That would enable it to recall conversations weeks later, build contextual knowledge over time, and offer continuity more akin to a personalized digital assistant.

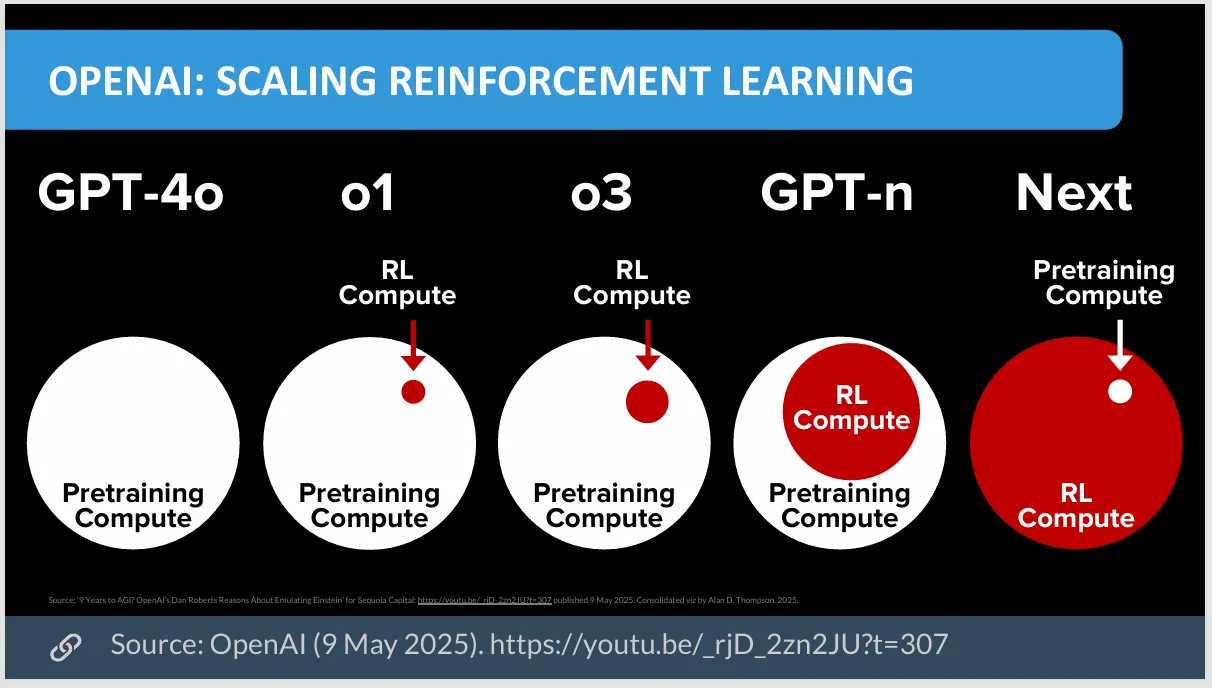

Improvements in reasoning sound equally ambitious. They are expected to take the form of "structured chain-of-thought" processing, which would let the model break complex problems into logical, multi-step sequences, mirroring human deliberative thought.

As for parameters, rumors float everything from 10 trillion to 50 trillion, up to a headline-grabbing one quadrillion. However, Altman himself has said that “the era of parameter scaling is already over”: AI training techniques are shifting focus from quantity to quality, with better learning approaches making smaller models extremely powerful.

And OpenAI faces another fundamental problem: It’s running out of internet data to train on. The solution? Having AI generate its own training data, which could mark a new era in AI training.

The experts weigh in

"The next leap will be synthetic data generation in verifiable domains," Andrew Hill, CEO of AI agent on-chain arena Recall, told Decrypt. "We're hitting walls on internet-scale data, but the reasoning breakthroughs show that models can generate high-quality training data when you have verification mechanisms. The simplest examples are math problems where you can check if the answer is correct, and code where you can run unit tests."

Hill sees this as transformative: "The leap is about creating new data that's actually better than human-generated data because it's iteratively refined through verification loops, and is created much much faster."
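Hill's point about verification can be illustrated with a toy sketch of the filtering step, assuming simple arithmetic as the checkable domain. The `propose_answer` function is a hypothetical stand-in for a model (here it is deliberately wrong about 30% of the time); a verifier keeps only candidates it can confirm, and problems with no verified answer are dropped. In a real pipeline the proposals would come from an LLM and, for code, the check would be running unit tests.

```python
import random


def propose_answer(a: int, b: int, rng: random.Random) -> int:
    """Hypothetical stand-in for a model: answers a + b, but is wrong ~30% of the time."""
    correct = a + b
    if rng.random() < 0.3:
        # Introduce a small, nonzero error so the answer is definitely wrong.
        return correct + rng.choice([-2, -1, 1, 2])
    return correct


def verify(a: int, b: int, answer: int) -> bool:
    """Verifier: in a checkable domain like arithmetic, correctness is exact."""
    return answer == a + b


def generate_verified_dataset(n_problems: int, attempts: int = 4, seed: int = 0) -> list[dict]:
    """Sample candidate answers and keep only those that pass verification."""
    rng = random.Random(seed)
    dataset = []
    for _ in range(n_problems):
        a, b = rng.randint(0, 999), rng.randint(0, 999)
        # Try several candidates; keep the first one the verifier accepts.
        for _ in range(attempts):
            candidate = propose_answer(a, b, rng)
            if verify(a, b, candidate):
                dataset.append({"prompt": f"What is {a} + {b}?", "answer": candidate})
                break
        # If every attempt failed, the problem is simply left out of the dataset.
    return dataset


data = generate_verified_dataset(1000)
print(len(data), "verified examples")
print(data[0])
```

Because only checked answers survive, the filtered dataset is correct by construction even though the generator is unreliable. Training on that data and repeating the process is what would turn this single filter into the kind of iterative loop Hill describes.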

Benchmarks are another battleground: AI expert and educator David Shapiro expects the model to achieve 95% on MMLU and to jump from 32% to 82% on SWE-bench, which would make it basically a god-level AI model. If even half of that is true, GPT-5 will make headlines. And internally, there’s real confidence, with even some OpenAI researchers hyping the model before release.

It’s wild watching people use ChatGPT now, knowing what’s coming.

— Tristan (@ItsTKai) June 12, 2025

Don’t believe the hype

Experts Decrypt interviewed cautioned that anyone expecting GPT-5 to reach AGI levels of ability ought to contain their enthusiasm. Hill said he expects an “incremental step, masquerading as revolution.”

Wyatt Mayham, CEO at Northwest AI Consulting, was more optimistic, predicting GPT-5 would likely be “a meaningful leap rather than an incremental one,” and adding: “I would expect longer context windows, more native multimodality, and shifts in how agents can act and reason. I'm not betting on a silver bullet by any means, but I do think GPT-5 should expand the type of tools we can confidently ship to users.”

GPT-5 further confirmed to be a core omni-modal reasoning model capable of both quick responses and long reasoning. pic.twitter.com/DTlCadJvGs

— Chubby♨️ (@kimmonismus) February 16, 2025

With every two steps forward comes one in retreat, said Mayham: “Each major release solves the previous generation's most obvious limitations while introducing new ones.”

GPT-4 fixed GPT-3's reasoning gaps but hit data walls. Reasoning models such as o3 improved logical thinking but are expensive and slow.

Tony Tong, CTO at Intellectia AI (a platform that provides AI insights for investors), is also cautious, expecting a better model but not the world-changing leap many AI enthusiasts anticipate. “My bet is GPT-5 will combine deeper multimodal reasoning, better grounding in tools or memory, and major steps forward in alignment and agentic behavior control,” Tong told Decrypt. “Think: more controllable, more reliable, and more adaptive.”

And Patrice Williams-Lindo, CEO at Career Nomad, predicted that GPT-5 won’t be much more than an “incremental revolution.” She suspects, however, that it might be especially good for daily AI users rather than enterprise applications.

“The compound effects of reliability, contextual memory, multimodality, and lower error rates could be game-changing in how people actually trust and use these systems daily. This by itself could be a huge win," said Williams-Lindo.

Some experts are simply skeptical that GPT-5, or any other LLM, will be remembered for much at all.

AI researcher Gary Marcus, who has long been critical of pure scaling approaches (the idea that better models simply need more parameters and data), wrote in his annual predictions for the year: "There could continue to be no ‘GPT-5 level’ model (meaning a huge, across the board quantum leap forward as judged by community consensus) throughout 2025."

Marcus is betting on upgrade announcements rather than brand-new foundation models. That said, he counts this among his low-confidence guesses.

The billion-dollar brain drain

Whether Mark Zuckerberg’s raid on OpenAI’s braintrust delays the launch of GPT-5 is anyone’s guess.

“It is definitely slowing their efforts,” David A. Johnston, lead code maintainer at the decentralized AI network Morpheus, told Decrypt. Besides money, Johnston believes the top talent is morally motivated to work on open-source initiatives like Llama rather than closed-source alternatives like ChatGPT or Claude.

Still, some experts think the project is already so evolved that the talent drain won’t affect it.

Mayham said that the “July 2025 release looks realistic. Even with some key talent moving to Meta, I think OpenAI still seems on track. They've retained core leadership and adjusted comp so they are steadying out a bit, it seems.”

Williams-Lindo added: “OpenAI’s momentum and capital pipeline are strong. What’s more impactful is not who left, but how those who stay recalibrate priorities—particularly if they double down on productization or pause to address safety or legal pressures.”

If history is any guide, the world will get its GPT-5 reveal soon, along with a flurry of headlines, hot takes, and “Is that all?” moments. And then just like that, the entire industry will start asking the next big question that matters: When GPT-6?